Hackathon

The hackathon session was held on September 21st at the Sidewalk Toronto office at 307 Lake Shore Boulevard East, Toronto. The event started at 9:30 am and finished around 7:00 pm. Twelve engineers from Sidewalk Labs and 27 people from the community took part. The community members included engineers, designers, and people with lived experience of a disability. Two members of the accessibility team at Sidewalk Labs and five IDRC members facilitated the event. One Sidewalk Labs engineer was also available throughout the session to help with the electrical components each project required.

Overview of the Day

Participants were divided into 8 groups with at least one Sidewalk engineer in each group. The participants were assigned to projects they had expressed an interest in during the invitation process.

During the morning session (10:00 am - 12:00 pm), groups had 2 hours to assign roles, develop a plan, and start building their project. Every hour, groups were asked to report their progress and notify facilitators if they needed assistance.

After lunch, during the afternoon session (1:00 pm - 4:30 pm), groups had roughly another 3 hours to work on their project. At 2:30 pm, each group shared a quick progress update with the larger group and received feedback from peers and facilitators. Around 4:45 pm, groups began giving their final presentations.

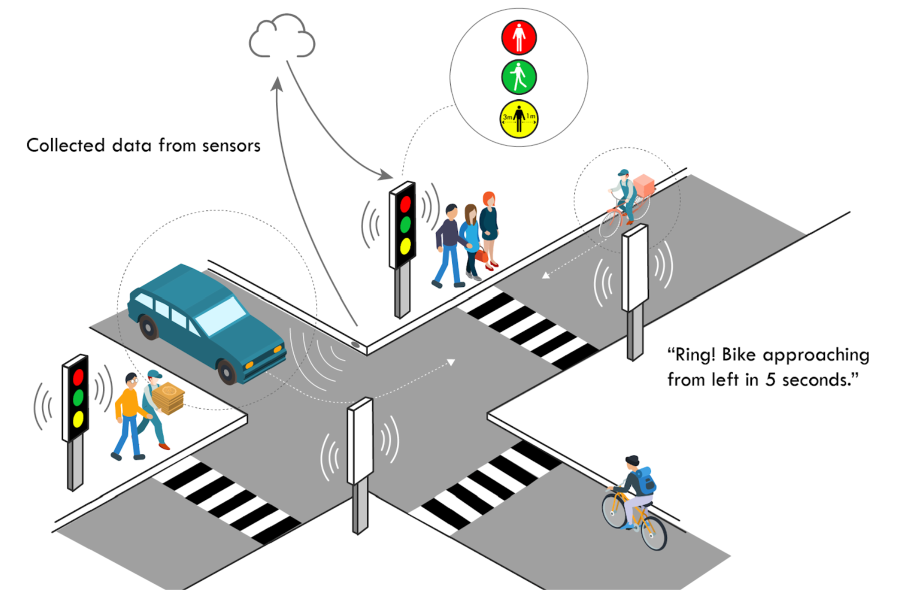

Hackathon Project #1: Audible or haptic incoming traffic warnings at intersections

Team’s Project Description:

An occupancy sensor (pressure sensor, camera, etc.) in the street that lets people know about someone approaching on a bicycle or car if they’re preparing to cross the street and / or currently on the street.

Sensors installed at intersection corners near crosswalks detect incoming traffic and measure its relative velocity. A honk or ring sounds at the existing crosswalk signs when an object passes a sensor. The sound depends on the speed of the object: a faster object triggers a honk, whereas a slower object triggers a ring. Coloured lights on the same crosswalk signs convey the same information: yellow for slower objects and red for faster ones.
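The speed-to-signal mapping described above can be sketched in a few lines. This is only an illustration: the team did not state a cutoff between "slower" and "faster", so `FAST_THRESHOLD_KMH`, `CrossingCue`, and `cue_for_speed` are hypothetical names and values.

```python
from dataclasses import dataclass

# Hypothetical cutoff: the project description gives no speed threshold.
FAST_THRESHOLD_KMH = 20.0


@dataclass(frozen=True)
class CrossingCue:
    sound: str  # "honk" or "ring"
    light: str  # "red" or "yellow"


def cue_for_speed(speed_kmh: float) -> CrossingCue:
    """Pick the audible and visual cue for an object passing the sensor."""
    if speed_kmh < 0:
        raise ValueError("speed must be non-negative")
    if speed_kmh >= FAST_THRESHOLD_KMH:
        # Faster objects: honk and red light.
        return CrossingCue(sound="honk", light="red")
    # Slower objects: ring and yellow light.
    return CrossingCue(sound="ring", light="yellow")
```

Keeping the sound and the light in one value means both cues are always derived from the same speed reading.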

Hackathon Project #2: Tranquil Refuge

Team’s Project Description:

A place where people who need a physical or sensory reprieve from the environment can go to rest and recharge. Potential to give priority access to people with accessibility needs.

This project proposes a semi-enclosed space with the option to book fully enclosed rooms. The semi-enclosed space resembles a courtyard and provides shade, water, and seating areas. Each room is climate controlled and lets the user adjust the room environment, including temperature, sound, and lighting. Each room has a place to lie down, a plant, and calming walls. Rooms can be operated by voice or through a touch screen connected to Google Home. Rooms can be booked through an online system that is also accessible outside the rooms, and indicators outside each room show whether it is currently in use and the times for which it has been booked. Settings run on a timer in 20-minute increments. Users who want to stay in the room must reconfigure their settings at the end of each increment, up to a maximum of 60 minutes. After 60 minutes, users must exit the space and re-enter a room to continue using it. If a user has not left after 90 minutes, management will be called to check on the person. Each room is equipped with an emergency phone.
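The timing rules above (20-minute increments, a 60-minute maximum, and a check-in at 90 minutes) could be expressed as a small state function. This is a sketch under assumptions: the function name and the status strings are hypothetical, and only the three durations come from the team's description.

```python
INCREMENT_MIN = 20    # settings must be reconfigured every 20 minutes
MAX_SESSION_MIN = 60  # maximum continuous use of a room
CHECK_IN_MIN = 90     # management is called if the user has not left


def room_status(elapsed_min: float, confirmed_increments: int) -> str:
    """Return the room state after `elapsed_min` minutes of use.

    `confirmed_increments` counts the 20-minute blocks the user has set or
    reconfigured so far (1 when they first enter the room).
    """
    if elapsed_min < 0 or confirmed_increments < 1:
        raise ValueError("invalid session values")
    if elapsed_min >= CHECK_IN_MIN:
        return "call_management"
    if elapsed_min >= MAX_SESSION_MIN:
        return "must_exit"
    if elapsed_min >= confirmed_increments * INCREMENT_MIN:
        return "reconfigure_settings"
    return "in_use"
```

For example, `room_status(25, 2)` returns `"in_use"` because the user has already reconfigured once, while `room_status(20, 1)` prompts for new settings.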

Hackathon Project #3: Construction Advisory Beacon Messages

Team’s Project Description:

An advisory message sent from a beacon informing people of construction impediment(s) on the path.

This project proposes a new feature integrated with BlindSquare that uses currently installed traffic cameras to identify areas with construction through image identification scripts. BlindSquare users receive a message describing where in the intersection the construction is, with the option to “shake for more” details about the construction area. Additionally, the team proposed working with the City of Toronto on a new bylaw requiring construction sites to place beacons around their site. Staff would be required to review the images identified as construction areas and write detour instructions for BlindSquare users.

Hackathon Project #4: Self-driving cars for sub-emergency medical visits

Team’s Project Description:

A dependable audio interface for ordering non-emergency medical transport.

This project proposed an app that can be accessed through Google Assistant or Siri. The app is activated with a single action and walks the user through a series of questions to determine the level of assistance needed. First, the app confirms whether or not the situation is an emergency. If it is an emergency, the app calls 9-1-1 for the user. If not, the app confirms the user's location and calls an autonomous vehicle (AV) to take them to the closest urgent care center. The app offers the user more information about their pick-up and asks whether they wish to send information about their situation. It gives instructions for accessing the AV, such as how to identify the vehicle and its estimated time of arrival. The AV is a large platform with wheels and glass walls, so that wheelchair users can easily wheel into the vehicle. Working together with the AV is a series of robots that fold flat and unfold into a wheelchair to help transport users or their belongings from their home into the AV.
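The question flow above can be sketched as a simple decision function. The function name, parameters, and step strings are hypothetical, chosen only to illustrate the order of decisions the team described; no real assistant or dispatch API is implied.

```python
from typing import List


def triage_steps(
    is_emergency: bool,
    wants_pickup_details: bool = False,
    wants_to_share_situation: bool = False,
) -> List[str]:
    """Return the ordered actions the app would take for one request."""
    if is_emergency:
        # Emergencies are handed off immediately; no vehicle is dispatched.
        return ["call 9-1-1"]

    steps = [
        "confirm user location",
        "dispatch AV to closest urgent care center",
    ]
    if wants_pickup_details:
        steps.append("describe vehicle and estimated time of arrival")
    if wants_to_share_situation:
        steps.append("send situation details")
    return steps
```

Checking for an emergency first keeps the 9-1-1 path as short as possible, which matches the order given in the project description.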

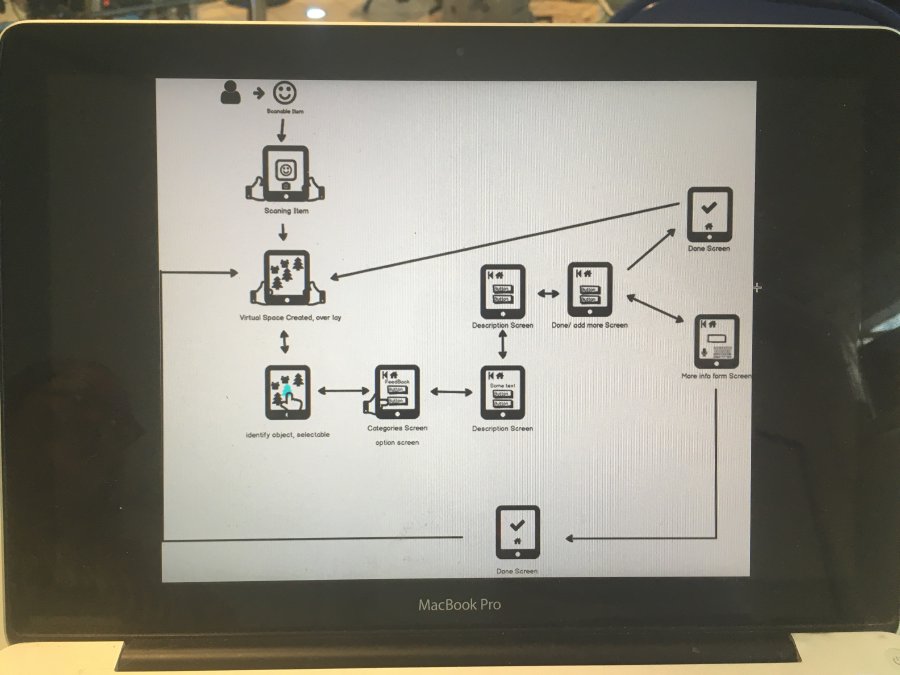

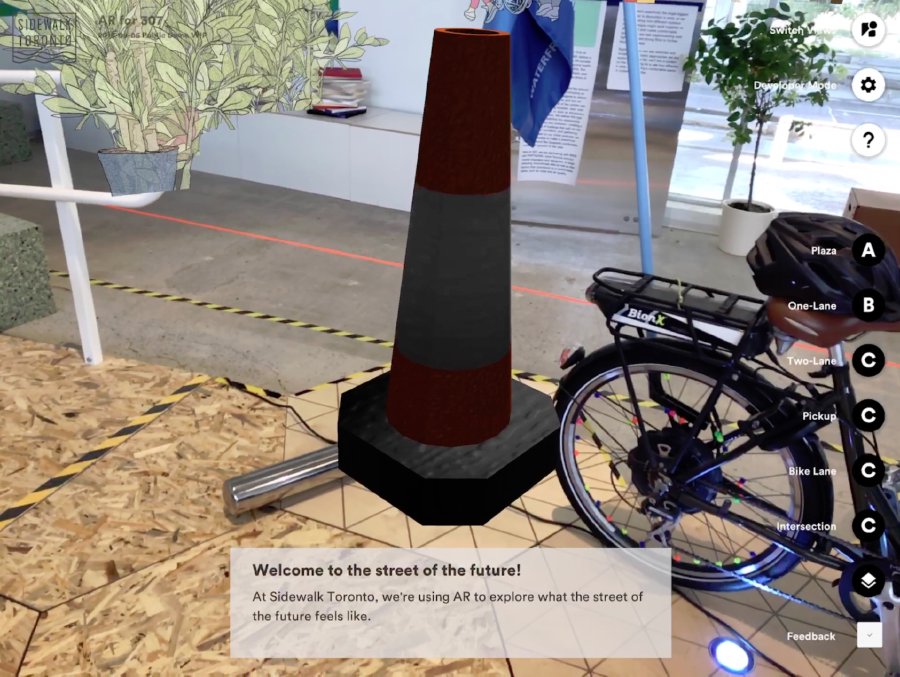

Hackathon Project #5: Augmented reality park feedback experience

Team’s Project Description:

Use of augmented reality (AR) to provide actionable information about public park features and amenities. Additionally, provides users with an easy, accessible means of reporting maintenance issues within the park to the responsible parties.

Users can explore a public park in AR using an iPad. The user can select key features, and the AR app provides an audio and text description of each. Users can also report issues and provide feedback through the app by placing an AR pylon. After the pylon is placed, the app guides the user through a series of questions to describe the issue and determine the responsible party. The information is then submitted to the responsible parties, and the user is given the option to provide more feedback or return to AR exploration. The data collected from the AR pylons is compiled in a CSV database. The data includes: date, time, location (latitude and longitude), a screenshot from the app of the area identified as an issue, and feedback information.
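As an illustration of the data listed above, one pylon report could be written as a CSV row as follows. The column names, the helper functions, and the sample values are assumptions made for this sketch; the team's actual file layout is not described.

```python
import csv
import io
from datetime import datetime

# Hypothetical column names based on the fields listed in the description.
FIELDS = ["date", "time", "latitude", "longitude", "screenshot", "feedback"]


def report_row(when: datetime, lat: float, lon: float,
               screenshot: str, feedback: str) -> dict:
    """Build one CSV row for an AR pylon report."""
    return {
        "date": when.date().isoformat(),
        "time": when.time().isoformat(timespec="seconds"),
        "latitude": lat,
        "longitude": lon,
        "screenshot": screenshot,  # file name of the in-app screenshot
        "feedback": feedback,
    }


def to_csv(rows) -> str:
    """Serialize report rows to CSV text with a header line."""
    buf = io.StringIO(newline="")
    writer = csv.DictWriter(buf, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()


# Sample values only, not data from the event.
sample = report_row(datetime(2020, 1, 2, 14, 30), 43.65, -79.36,
                    "pylon-001.png", "Broken bench")
print(to_csv([sample]))
```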

Hackathon Project #6: Rumble pavement

Team’s Project Description:

A dynamic audible/haptic indication of where the sidewalk (pedestrian-only section) ends and potentially dangerous bike or vehicular traffic starts.

The pavement near the edges of pedestrian throughways is equipped with speakers beneath the surface. The speakers produce a pulsing pattern through a subwoofer, creating both an audible sound and a haptic vibration. As pedestrians walk closer to the edge, the pulsing rate increases until it becomes a constant indicator at the edge. Visual indicators such as coloured lines and lights are also present: widely spaced red lines indicate an upcoming edge, closer yellow lines with lights indicate that you are approaching the edge, and very close raised yellow lines indicate that you have reached the edge.
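The relationship between distance and pulsing rate could look like the following sketch. The warning-zone width, the rate range, the linear ramp, and the silent state outside the zone are all assumptions; the description only says the rate increases toward the edge and becomes constant at the edge.

```python
from typing import Tuple

# Hypothetical values: the description gives no distances or rates.
WARNING_ZONE_M = 2.0  # width of the zone in which pulsing is active
MIN_RATE_HZ = 1.0     # pulse rate at the outer boundary of the zone
MAX_RATE_HZ = 8.0     # pulse rate just before the edge


def rumble_signal(distance_to_edge_m: float) -> Tuple[str, float]:
    """Return (mode, pulse rate in Hz) for a pedestrian's distance to the edge."""
    if distance_to_edge_m <= 0:
        # At the edge the pulsing becomes a constant indicator.
        return ("constant", 0.0)
    if distance_to_edge_m >= WARNING_ZONE_M:
        # Assumed: no signal outside the warning zone.
        return ("off", 0.0)
    # Linear ramp: the closer to the edge, the faster the pulse.
    closeness = 1.0 - distance_to_edge_m / WARNING_ZONE_M
    rate = MIN_RATE_HZ + (MAX_RATE_HZ - MIN_RATE_HZ) * closeness
    return ("pulse", rate)
```

A linear ramp is the simplest choice here; a stepped or exponential curve would satisfy the same description.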

Hackathon Project #7: Tactile Sidewalk Wayfinding Strip

Team’s Project Description:

A continuous tactile path on all sidewalks that provides wayfinding to and contextual, place-based information about the nearest transit stop.

Raised indicators in the shape of arrowheads/chevrons provide tactile feedback on the direction of the closest transit stop. Inner edges are raised more sharply to help cane users feel the shape of the indicators in the intended direction. A groove runs along the middle of the shortest path for cane users to follow, and it also serves as a visual wayfinding cue. Edges of intersections are indicated with raised dots, matching the current system of tactile sidewalk cues. Turns are marked with a connected raised strip. The number of consecutive chevrons indicates the relative distance from the transit stop, with more chevrons indicating a greater distance from the stop.
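The chevron-count rule can be illustrated with a short function. The distance represented by each chevron and the maximum count are hypothetical; the description only states that more chevrons mean a greater distance from the stop.

```python
import math

# Hypothetical values: the description does not define the scale.
METRES_PER_CHEVRON = 50.0
MAX_CHEVRONS = 5


def chevron_count(distance_to_stop_m: float) -> int:
    """Number of consecutive chevrons to place at a given distance from the stop."""
    if distance_to_stop_m < 0:
        raise ValueError("distance must be non-negative")
    count = math.ceil(distance_to_stop_m / METRES_PER_CHEVRON)
    # Always show at least one chevron, and cap the group so it stays readable.
    return max(1, min(MAX_CHEVRONS, count))
```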

Hackathon Project #8: AI Floor Describer

Team’s Project Description:

Auto-generate an audio description of a one-floor floor plan, including the elevators / stairs / washrooms / seating area / doors.

This project proposed an app [to integrate with BlindSquare] where floor plans can be uploaded and features can be identified using Microsoft VoTT. Once features are identified and categorized, descriptions will be automatically written for users to help navigate the area. The app will describe the direction the user is facing and key features near the user. The app has options to generate best routes based on accessibility requirements for the user such as ensuring clear paths with ramps for wheelchair users, or the shortest path with few obstacles for blind users.

Wrapping Up

The event concluded with a vote. Each participant was given three sticky notes and could cast them for any project, including several votes for the same project, but could vote for their own project only once. The winning projects were the Tranquil Refuge and the Rumble Pavement, with 14 votes each.

- Hackathon Project #1: Audible or haptic incoming traffic warnings at intersections - 8 votes

- Hackathon Project #2: Tranquil Refuge - 14 votes

- Hackathon Project #3: Construction Advisory Beacon Messages - 8 votes

- Hackathon Project #4: Self-driving cars for sub-emergency medical visits - 8 votes

- Hackathon Project #5: Augmented reality park feedback experience - 9 votes

- Hackathon Project #6: Rumble pavement - 14 votes

- Hackathon Project #7: Tactile Sidewalk Wayfinding Strip - 6 votes

- Hackathon Project #8: AI Floor Describer - 6 votes